Supported Configurations

Volume Shadowing for OpenVMS provides data availability across the full range of configurations, from single nodes to large OpenVMS Cluster systems, so you can protect data where you need it most.

There are no restrictions on the location of shadow set members beyond the valid disk configurations defined in the Software Product Descriptions (SPDs) for the OpenVMS operating system and for OpenVMS Cluster systems.

If an individual disk volume is already mounted as a member of an active shadow set, the disk volume cannot be mounted as a standalone disk on another node.

You can mount a maximum of 500 disks in two- or three-member shadow sets on a standalone system or in an OpenVMS Cluster system.

A limit of 10,000 single-member shadow sets is allowed on a standalone system or on an OpenVMS Cluster system. Dismounted shadow sets, unused shadow sets, and shadow sets with no bitmaps allocated to them are included in this total. These limits are independent of controller and disk type. The shadow sets can be mounted as public or private volumes.

Starting with OpenVMS Version 7.3, the SHADOW_MAX_UNIT system parameter is available for specifying the maximum number of shadow sets that can exist on a node. For more information about SHADOW_MAX_UNIT, see “Volume Shadowing Parameters” and “Guidelines for Using Volume Shadowing Parameters”.

OpenVMS Version 8.4 supports six-member shadow sets, compared to the previous maximum of three members. This is useful for multisite disaster-tolerant configurations. With a three-member shadow set, a three-site disaster-tolerant configuration has only one shadow set member per site. In this scenario, when two sites fail, the member remaining at the surviving site becomes a single point of failure. With six-member shadow set support, you can have two members of a shadow set at each of the three sites, providing high availability.
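The arithmetic behind this comparison can be sketched in a few lines of Python. This is only an illustration: the function name `surviving_members` is hypothetical, and it assumes members are spread evenly across sites, which the shadowing software does not itself require.

```python
def surviving_members(total_members: int, sites: int, failed_sites: int) -> int:
    """Members still available after `failed_sites` sites are lost.

    Assumes an even spread of members across sites (an illustrative
    simplification, not a shadowing requirement).
    """
    if total_members % sites != 0:
        raise ValueError("members must divide evenly across sites")
    if not 0 <= failed_sites <= sites:
        raise ValueError("failed_sites out of range")
    per_site = total_members // sites
    return per_site * (sites - failed_sites)


for total in (3, 6):
    left = surviving_members(total, sites=3, failed_sites=2)
    note = "single point of failure" if left == 1 else "still redundant"
    print(f"{total}-member set, two of three sites lost: {left} member(s) left ({note})")
```

With three members, losing two sites leaves one copy of the data. With six members, the surviving site still holds two.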

For example:

|

All systems that mount a shadow set using "Extended Memberships" (up to six members) must be running OpenVMS Version 8.4. If the systems that have the virtual unit mounted are not "Extended Memberships" capable, any attempt to mount more than three members fails. If a system that is not capable of "Extended Memberships" tries to MOUNT a virtual unit that is using "Extended Memberships" on other nodes, the MOUNT command fails. Once the virtual unit is enabled to use "Extended Memberships", the characteristic is maintained until the virtual unit is dismounted clusterwide, even if the membership is reduced to fewer than four members. This feature is not ported to VAX. The virtual unit characteristic voting ensures compatibility. If an Alpha or Integrity server disk is mounted without the new feature, the virtual unit can also be mounted on the VAX.

A new area of the Storage Control Block (SCB) of the disk is used to store the extended membership arrays. Therefore, an attempt to MOUNT a six-member shadow set on a previous version works only if the members are specified on the command line (maximum of three members). The $MOUNT/INCLUDE qualifier in previous versions fails to find the new membership area in the SCB and therefore does not include any other former members.
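The mount rules for "Extended Memberships" can be modeled as a small state machine. The Python sketch below is a toy model, not the shadowing driver's logic: the class `VirtualUnit` and its methods are hypothetical, and it assumes the characteristic becomes enabled when a fourth member is added, which the text above does not state explicitly.

```python
from dataclasses import dataclass, field

MAX_STANDARD_MEMBERS = 3
MAX_EXTENDED_MEMBERS = 6


@dataclass
class VirtualUnit:
    """Toy model of a virtual unit and the nodes that have it mounted."""
    members: int = 0
    extended: bool = False  # sticky until dismounted clusterwide
    mounted_on: dict = field(default_factory=dict)  # node name -> extended-capable?

    def mount(self, node: str, extended_capable: bool) -> bool:
        # A node without Extended Memberships support cannot mount a
        # virtual unit that is already using the feature elsewhere.
        if self.extended and not extended_capable:
            return False
        self.mounted_on[node] = extended_capable
        return True

    def add_member(self) -> bool:
        # More than three members requires every mounting node to be capable.
        all_capable = all(self.mounted_on.values())
        limit = MAX_EXTENDED_MEMBERS if all_capable else MAX_STANDARD_MEMBERS
        if not self.mounted_on or self.members >= limit:
            return False
        self.members += 1
        if self.members > MAX_STANDARD_MEMBERS:
            self.extended = True  # assumption: enabled by the fourth member
        return True

    def remove_member(self) -> None:
        # Dropping below four members does not clear the characteristic.
        self.members = max(0, self.members - 1)

    def dismount(self, node: str) -> None:
        self.mounted_on.pop(node, None)
        if not self.mounted_on:  # dismounted clusterwide
            self.extended = False


vu = VirtualUnit()
vu.mount("NEW1", extended_capable=True)
for _ in range(4):
    vu.add_member()
print(vu.extended, vu.mount("OLD1", extended_capable=False))
```

In this model, the characteristic survives a reduction in membership, and an older node can mount the virtual unit again only after it is dismounted on every node.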

You can shadow system disks as well as data disks. Thus, a system disk need not be a single point of failure for any system that boots from that disk. System disk shadowing becomes especially important for OpenVMS Cluster systems that use a common system disk from which multiple computers boot. Volume shadowing makes use of the OpenVMS distributed lock manager, and the quorum disk must be accessed before locking is enabled. Note that you cannot shadow quorum disks.

Integrity server and Alpha systems can share data on shadowed data disks, but separate system disks are required — one for each architecture.

If you use a minicopy operation to return a member to the shadow set and you are running OpenVMS Alpha Version 7.2-2 or Version 7.3, you must perform additional steps to access the dump file (SYSDUMP.DMP) from a system disk shadow set. This section describes these steps.

Starting with OpenVMS Alpha Version 7.3-1, this procedure is not required because of the /SHADOW_MEMBER qualifier introduced for the System Dump Analyzer (SDA). SDA (referenced in step 2) is the OpenVMS utility for analyzing dump files and is documented in the OpenVMS System Analysis Tools Manual.

When the primitive file system writes a crash dump, the writes are not recorded in the bitmap data structure. Therefore, perform the following steps:

1. Check the console output at the time of the system failure to determine which device contains the system dump file.

   The console displays the device to which the crash dump is written. That shadow set member contains the only full copy of that file.

2. Assign a low read cost value to the member to which the dump is written by issuing the following command:

   $ SET DEVICE/READ_COST=nnn $allo_class$ddcu

   By setting the read cost to a low value on that member, any reads done by SDA or by the SDA command COPY are directed to that member. HP recommends setting /READ_COST to 1.

3. After you have analyzed or copied the system dump, you must return the read cost value of the shadow set member to the previous setting, which is either the default setting assigned automatically by the volume shadowing software or the value you had previously assigned. If you do not, all read I/O is directed to the member with the READ_COST setting of 1, which can unnecessarily degrade read performance.

   To change the READ_COST setting of a shadow set member to its default value, issue the following command:

   $ SET DEVICE/READ_COST=0 DSAnnnn

On each Integrity server system disk, there can exist up to two File Allocation Table (FAT) partitions that contain OpenVMS boot loaders, Extensible Firmware Interface (EFI) applications, and hardware diagnostics. The OpenVMS bootstrap partition and, when present, the diagnostics partition are respectively mapped to the following container files on the OpenVMS system disk:

SYS$LOADABLE_IMAGES:SYS$EFI.SYS

SYS$MAINTENANCE:SYS$DIAGNOSTICS.SYS

The contents of the FAT partitions appear as fsn: devices at the console EFI Shell> prompt. The fsn: devices can be modified directly by user commands entered at the EFI Shell> prompt and by EFI console or EFI diagnostic applications. Neither OpenVMS nor any EFI console environment that might share the system disk is notified of these partition modifications. You must ensure that the changes are properly coordinated and synchronized with OpenVMS and with any other EFI consoles that might be in use.

You must take precautions when modifying the console in configurations using either or both of the following:

OpenVMS host-based volume shadowing for the OpenVMS Integrity server system disk

Shared system disks and parallel EFI console access across Integrity server environments sharing a common system disk

You must preemptively reduce the OpenVMS system disk environments to a single-member host-based volume shadow set or to a non-shadowed system disk, and you must externally coordinate access to avoid parallel accesses to the Shell> prompt whenever making shell-level modifications to the fsn: devices, such as:

Installing or operating diagnostics within the diagnostics partition.

Allowing diagnostics in the partition (or running from removable media) to modify the boot or the diagnostic partition on an OpenVMS Integrity server system disk.

Modifying directly or indirectly the boot or the diagnostics partition within these environments from the EFI Shell> prompt.

If you do not take these precautions, any modifications made within the fsn: device associated with the boot partition or the device associated with the diagnostic partition can be overwritten and lost immediately or after the next OpenVMS host-based volume shadowing full-merge operation.

For example, when the system disk is shadowed and the EFI console shell changes the contents of these container files on one of the physical members, the volume shadowing software is unaware that a write was done to a physical device. If the system disk is a multiple-member shadow set, you must make the same changes to all of the other physical devices that are current shadow set members. If you do not, the contents of these files might regress when a full merge operation is next performed on that system disk. The merge operation might occur many days or weeks after any EFI changes are made.

Furthermore, if a full merge is active on the shadowed system disk, you must not make changes to either file using the console EFI shell.

To suspend a full merge that is in progress or to determine the membership of a shadow set, see Chapter 8.

The precautions are applicable only for the Integrity server system disks that are configured for host-based volume shadowing, or are configured and shared across multiple OpenVMS Integrity server systems. Configurations that are using controller-based RAID, that are not using host-based shadowing with the system disk, or that are not shared with other OpenVMS Integrity server systems, are not affected.

To use the minicopy feature in a mixed-version OpenVMS Cluster system of Integrity server and Alpha systems, every node in the cluster must use a version of OpenVMS that supports this feature. Minicopy is supported on OpenVMS Integrity servers starting with Version 8.2, and on OpenVMS Alpha starting with Version 7.2-2.

Shadow sets can also be constituents of a bound volume set or a stripe set. A bound volume set consists of one or more disk volumes that have been bound into a volume set by specifying the /BIND qualifier with the MOUNT command. “Shadowing Disks Across an OpenVMS Cluster System” describes shadowing across OpenVMS Cluster systems. “Striping (RAID) Implementation” contains more information about striping and how RAID (redundant arrays of independent disks) technology relates to volume shadowing.

The host-based implementation of volume shadowing allows disks that are connected to multiple physical controllers to be shadowed in an OpenVMS Cluster system. There is no requirement that all members of a shadow set be connected to the same controller. Controller independence allows you to manage shadow sets regardless of their controller connection or their location in the OpenVMS Cluster system and helps provide improved data availability and flexible configurations.

For clusterwide shadowing, members can be located anywhere in an OpenVMS Cluster system and served by MSCP servers across any supported OpenVMS Cluster interconnect, including the CI (computer interconnect), Ethernet (10/100 and Gigabit), ATM, Digital Storage Systems Interconnect (DSSI), and Fiber Distributed Data Interface (FDDI). For example, OpenVMS Cluster systems using FDDI and wide area network services can be hundreds of miles apart, which further increases the availability and disaster tolerance of a system.

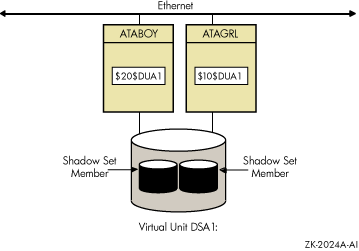

Figure 1-3 shows how shadow-set members are on line to local adapters located on different nodes. In the figure, a disk volume is local to each of the nodes ATABOY and ATAGRL. The MSCP server provides access to the shadow set members over the Ethernet. Even though the disk volumes are local to different nodes, the disks are members of the same shadow set. A member that is local to one node can be accessed by the remote node through the MSCP server.

The shadowing software maintains shadow sets in a distributed fashion on each node that mounts the shadow set in the OpenVMS Cluster system. In an OpenVMS Cluster environment, each node creates and maintains shadow sets independently. The shadowing software on each node maps each shadow set, represented by its virtual unit name, to its respective physical units. Shadow sets are not served to other nodes. When a shadow set must be accessed by multiple nodes, each node creates an identical shadow set. The shadowing software maintains clusterwide membership coherence for shadow sets mounted on multiple nodes. For shadow sets that are mounted on an OpenVMS Cluster system, mounting or dismounting a shadow set on one node in the cluster does not affect applications or user functions executing on other nodes in the system. For example, you can dismount the shadow set from one node in an OpenVMS Cluster system and leave the shadow set operational on the remaining nodes on which it is mounted.

The configuration requirements for enabling HBMM on an OpenVMS Cluster system are:

In a cluster of HP Integrity server and Alpha server systems, all Integrity server systems must be running OpenVMS Integrity servers Version 8.2 or later and all OpenVMS Alpha systems must be running OpenVMS Version 7.3-2 with the HBMM kit or Version 8.2 or later.

For more information on HBMM, see “Host-Based Minimerge (HBMM)”.

Sufficient memory must be available to support bitmaps, as described in “Memory Requirements”.

The following restrictions pertain to the configuration and operation of HBMM in an OpenVMS Cluster system.

An HBMM-enabled shadow set can be mounted only on HBMM-capable systems. Systems running versions of OpenVMS that support bitmaps but not HBMM can coexist in a cluster with systems that support HBMM, but they cannot mount an HBMM-enabled shadow set. The following OpenVMS versions support bitmaps but do not include HBMM support:

OpenVMS Alpha Versions 7.2-2 through Version 7.3-2. (Version 7.3-2 supports HBMM only if the Volume Shadowing HBMM kit is installed.)

For OpenVMS Version 8.2, the earliest version of OpenVMS Alpha that is supported in a migration or warranted configuration is OpenVMS Alpha Version 7.3-2.

CAUTION: The inclusion of a system, in a cluster, that does not support bitmaps turns off HBMM in the cluster and deletes all the existing HBMM and minicopy bitmaps.

HBMM can be used with all disks that are supported by Volume Shadowing for OpenVMS except disks on HSJ, HSC, and HSD controllers.

Host-based minimerge operations can only take place on a system that has an HBMM master bitmap for that shadow set. If you set the system parameter SHADOW_MAX_COPY to zero on all the systems that have a master bitmap for that shadow set, HBMM cannot occur on any of those systems, nor can full merges occur on any of the other systems (that lack a master bitmap) on which the shadow set is mounted, even if SHADOW_MAX_COPY is set to 1 or higher.

Consider a scenario in which a merge is required on a shadow set that is mounted on some systems that have HBMM master bitmaps and on some systems that do not. In such a scenario, the systems that do not have an HBMM master bitmap do not perform the merge as long as the shadow set is mounted on a system with an HBMM master bitmap. For information on how to recover from this situation, see “Managing Transient States in Progress”.
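The interaction between master bitmaps and SHADOW_MAX_COPY described above can be expressed as a small decision function. The following Python sketch is a simplified model of those two paragraphs only. The names `System` and `merge_candidates` and the "stalled" label are hypothetical, and real merge scheduling involves factors not modeled here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class System:
    name: str
    has_master_bitmap: bool
    shadow_max_copy: int


def merge_candidates(systems: List[System]) -> Tuple[str, List[str]]:
    """Return (merge kind, names of systems that may perform it)."""
    masters = [s for s in systems if s.has_master_bitmap]
    if masters:
        # While any mounting system holds a master bitmap, only those
        # systems may merge, and they perform a minimerge.
        eligible = [s.name for s in masters if s.shadow_max_copy > 0]
        return ("minimerge" if eligible else "stalled", eligible)
    # No master bitmap anywhere: any system that allows copies may full merge.
    eligible = [s.name for s in systems if s.shadow_max_copy > 0]
    return ("full merge" if eligible else "stalled", eligible)


cluster = [System("NODEA", True, 0), System("NODEB", False, 4)]
print(merge_candidates(cluster))
```

In this example NODEA holds the master bitmap but has SHADOW_MAX_COPY set to zero, so the model reports that no system can merge, even though NODEB has a nonzero value.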

HBMM is supported on OpenVMS Integrity servers Version 8.2 and on OpenVMS Alpha Version 8.2. HBMM is also supported on OpenVMS Alpha Version 7.3-2 with an HBMM kit.

HBMM does not require that all cluster members have HBMM support, but does require that all cluster members support bitmaps.

The earlier versions of OpenVMS that support bitmaps are OpenVMS Alpha Version 7.2-2 and later.

After an HBMM-capable system mounts a shadow set, and HBMM is enabled for use, only the cluster members that are HBMM-capable can mount that shadow set.

Minicopy requires that all cluster members have minicopy support. HBMM requires that all cluster members support bitmaps; however, it is not necessary that they all support HBMM.
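The version and capability rules in this section can be summarized as a short Python sketch. It is a reading aid rather than a supported tool. The names `Capability`, `capability`, `hbmm_usable`, and `can_mount` are hypothetical, versions are written as tuples such as `(7, 3, 2)` for Version 7.3-2, and architectures or versions not mentioned in this section are conservatively treated as having no bitmap support.

```python
from enum import IntEnum
from typing import Iterable, Tuple


class Capability(IntEnum):
    NONE = 0     # no bitmap support
    BITMAPS = 1  # bitmaps (minicopy), but not HBMM
    HBMM = 2


def capability(arch: str, version: Tuple[int, int, int], hbmm_kit: bool = False) -> Capability:
    """Classify a system using the version rules quoted in this section."""
    if arch == "integrity":
        return Capability.HBMM if version >= (8, 2, 0) else Capability.NONE
    if arch == "alpha":
        if version >= (8, 2, 0) or (version == (7, 3, 2) and hbmm_kit):
            return Capability.HBMM
        if version >= (7, 2, 2):
            return Capability.BITMAPS
    return Capability.NONE  # assumption: anything else lacks bitmap support


def hbmm_usable(cluster: Iterable[Capability]) -> bool:
    """HBMM needs every cluster member to support at least bitmaps."""
    return all(c >= Capability.BITMAPS for c in cluster)


def can_mount(system: Capability, set_uses_hbmm: bool) -> bool:
    """Only HBMM-capable systems can mount an HBMM-enabled shadow set."""
    return system == Capability.HBMM or not set_uses_hbmm


nodes = [
    capability("integrity", (8, 2, 0)),
    capability("alpha", (7, 3, 2), hbmm_kit=True),
    capability("alpha", (7, 3, 0)),
]
print(hbmm_usable(nodes), [can_mount(n, set_uses_hbmm=True) for n in nodes])
```

The third node supports bitmaps only, so it can remain in the cluster without disabling HBMM but cannot mount an HBMM-enabled shadow set.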

To enforce this restriction (and to provide for future enhancements), shadow sets using the HBMM feature are marked as having Enhanced Shadowing Features. This is included in the SHOW SHADOW DSAn display, as are the particular features that are in use, as shown in the following example:

|

Once a shadow set is marked as using Enhanced Shadowing Features, it remains marked until it is dismounted on all systems in the cluster. When you remount the shadow set, the requested features are reevaluated. If the shadow set does not use any enhanced features, it is noted on the display. This shadow set is available for mounting even on nodes that do not support the enhanced features.

Systems that are not HBMM-capable fail to mount HBMM shadow sets. However, if HBMM is not used by the specified shadow set, the shadow set can be mounted on earlier versions of OpenVMS that are not HBMM-capable.

If a MOUNT command for an HBMM shadow set is issued on a system that supports bitmaps but is not HBMM-capable, an error message is displayed. (As noted in “HBMM Restrictions”, systems running versions of Volume Shadowing for OpenVMS that support bitmaps but are not HBMM-capable can be members of the cluster with systems that support HBMM, but they cannot mount HBMM shadow sets.)

The message varies, depending on the number of members in the shadow set and the manner in which the mount is attempted. The mount may appear to hang (for approximately 30 seconds) while the Mount utility attempts to retry the command, and then fails.

A Mount utility remedial kit that eliminates the delay and displays a more useful message may be available in the future for earlier versions of OpenVMS that support bitmaps.

When a shadow set is marked as an HBMM shadow set, it remains marked until it is dismounted from all the systems in a cluster. When you remount a shadow set, if it is no longer using HBMM, it can be mounted on earlier versions of OpenVMS that are not HBMM-capable.