This section describes how to check the firmware version of the flash memory of the FC storage device, how to obtain the latest copy of the IPF Offline Diagnostics and Utilities CD, and how to configure the boot device paths for the storage device.

Before you can boot from an FC device on an OpenVMS Integrity server system, the EFI bootable firmware in the flash memory of the FC HBA must be at the latest supported revision.

IMPORTANT: If you have an entry-class Integrity server, you can update the firmware yourself. If you have a cell-based Integrity server, you must contact HP Customer Support to update the firmware for you.

To flash the memory of the FC HBA on an entry-class server, update the EFI driver and RISC firmware to the latest versions available. In addition, to enable the HBA factory default settings, update the NVRAM resident in the FLASH ROM on the HBA, if necessary.

To determine the most current supported versions of the RISC firmware and EFI driver, see the appropriate README text file on the latest supported HP IPF Offline Diagnostics and Utilities CD. For a 2 Gb FC device, this file is in the \efi\hp\tools\io_cards\fc2 directory. To update the driver and firmware, you can run a script on the CD that performs the update automatically. Enter the following command from that directory:

For a 4 Gb FC device, navigate to the fc4 directory (\efi\hp\tools\io_cards\fc4) to locate the README text file. To update the driver and firmware, use the following command (located in the fc4 directory):

You can also use the efiutil.efi utility located in either directory.

For instructions on obtaining the Offline Diagnostics and Utilities CD, see the procedure later in this section. For additional information about updating the bootable firmware of the FC device, see the Guidelines for OpenVMS Cluster Configurations.

You can determine the versions of the driver and RISC firmware currently installed on your Integrity server in two ways: from the console during system initialization, or by using the efiutil utility.

The driver and RISC firmware versions are shown in the booting console message that is displayed during system initialization, as in the following example. The RISC firmware version is indicated in the format n.nn.nnn.

```
HP 2 Port 2Gb Fibre Channel Adapter (driver n.nn, firmware n.nn.nnn)
```

The driver and RISC firmware versions are also shown in the display of the efiutil info command:

```
fs0:\efi\hp\tools\io_cards\fc2\efiutil> info
Fibre Channel Card Efi Utility n.nn (11/1/2004)

2 Fibre Channel Adapters found:

Adapter  Path                          WWN               Driver (Firmware)
A0       Acpi(000222F0,200)/Pci(1|0)   50060B00001CF2DC  n.nn (n.nn.nnn)
A1       Acpi(000222F0,200)/Pci(1|1)   50060B00001CF2DE  n.nn (n.nn.nnn)
```
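If you collect efiutil info output from several servers, you can script the comparison of the reported versions against the minimums listed in the README. The following Python sketch is hypothetical and is not an HP utility. It assumes the column layout of the sample display above. The functions outdated_adapters and version_tuple are illustrative names, and the version numbers 1.47, 1.49, 3.3.150, and 3.3.155 are invented placeholders for the n.nn and n.nn.nnn values. Take the real minimum versions from the README on the current CD.

```python
import re

# One adapter row of captured `efiutil info` output. The column order
# (adapter, path, WWN, driver, firmware in parentheses) is assumed from the
# sample display; adjust the pattern if your output differs.
ROW = re.compile(
    r"^(?P<adapter>A\d+)\s+(?P<path>\S+)\s+(?P<wwn>[0-9A-Fa-f]{16})\s+"
    r"(?P<driver>\d+(?:\.\d+)+)\s+\((?P<firmware>\d+(?:\.\d+)+)\)$"
)


def version_tuple(text: str) -> tuple[int, ...]:
    """Convert a dotted version such as '3.3.150' to (3, 3, 150)."""
    return tuple(int(part) for part in text.split("."))


def outdated_adapters(info_output: str, min_driver: str, min_firmware: str) -> list[str]:
    """Return IDs of adapters whose EFI driver or RISC firmware is below the minimums."""
    stale = []
    for line in info_output.splitlines():
        match = ROW.match(line.strip())
        if match is None:
            continue  # banner, column header, or blank line
        if (version_tuple(match["driver"]) < version_tuple(min_driver)
                or version_tuple(match["firmware"]) < version_tuple(min_firmware)):
            stale.append(match["adapter"])
    return stale


# Hypothetical capture: real output shows your actual version numbers.
sample = """\
2 Fibre Channel Adapters found:
Adapter  Path                          WWN               Driver (Firmware)
A0       Acpi(000222F0,200)/Pci(1|0)   50060B00001CF2DC  1.47 (3.3.150)
A1       Acpi(000222F0,200)/Pci(1|1)   50060B00001CF2DE  1.49 (3.3.155)
"""

print(outdated_adapters(sample, min_driver="1.49", min_firmware="3.3.155"))
# ['A0']
```

The sketch compares versions as integer tuples rather than as strings, so 3.3.150 is correctly treated as newer than 3.3.99.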

Obtain the latest copy of the IPF Offline Diagnostics and Utilities CD by either of the following methods:

Order the CD free of charge from the HP Software Depot site main page at:

http://www.hp.com/go/softwaredepot

Type ipf offline in the Search bar and select the latest version listed (dates are indicated in the listed product names).

Burn your own CD locally after downloading a master ISO image of the IPF Offline Diagnostics and Utilities CD from the following website:

http://www.hp.com/support/itaniumservers

Select your server product from the list provided.

From the HP Support page, select “Download drivers and software”.

From the “Download drivers and software” page, select “Cross operating system (BIOS, Firmware, Diagnostics, etc)”.

Download the Offline Diagnostics and Utilities software. Previous versions of the software might be listed along with the current (latest) version. Be sure to select the latest version.

Alternatively, you can select the appropriate Offline Diagnostics and Utilities link under the Description heading on this web page. Then you can access the installation instructions and release notes as well as download the software. The README text file on the CD also includes information about how to install the software and update the firmware.

Burn the full ISO image onto a blank CD, using a CD burner and any major CD burning software. To complete the recording process, see the operating instructions provided by your CD burner software. The downloaded CD data is a single ISO image file. This image file must be burned directly to a CD exactly as is. This creates a dual-partition, bootable CD.

For OpenVMS Integrity servers Version 8.2, the process of setting up an FC boot device required using the OpenVMS Integrity servers Boot Manager utility (SYS$MANAGER:BOOT_OPTIONS.COM) to specify values to the EFI Boot Manager. Starting with OpenVMS Integrity servers Version 8.2-1, this process is automated by the OpenVMS Integrity servers installation and upgrade procedures.

The OpenVMS Integrity servers installation/upgrade procedure displays the name of an FC disk as a boot device and prompts you to add the Boot Option. HP recommends that you accept this default. Alternatively, after the installation or upgrade completes, you can run the OpenVMS Integrity servers Boot Manager to set up or modify an FC boot device, as described in the following steps. Always use the OpenVMS Integrity servers installation/upgrade procedure or the OpenVMS Integrity servers Boot Manager to set up or modify an FC boot device; do not use EFI for this purpose.

NOTE: On certain entry-level servers, if no FC boot device is listed in the EFI boot menu, you might experience a delay in EFI initialization because the entire SAN is scanned. Depending on the size of the SAN, this delay might range from several seconds to several minutes. Cell-based systems (such as rx7620, rx8620, and the Superdome) are not affected by this delay. When booting OpenVMS from the installation DVD for the first time on any OpenVMS Integrity servers system, you might also experience a similar delay in EFI initialization.

If you did not allow the OpenVMS Integrity servers installation or upgrade procedure to set up your FC boot device automatically, or if you want to modify the boot option that was set up for that device, use the OpenVMS Integrity servers Boot Manager utility as follows:

If your operating system is not running, access the OpenVMS DCL triple dollar sign prompt ($$$) from the OpenVMS operating system main menu by choosing option 8 (Execute DCL commands and procedures). Otherwise, skip to the next step.

At the DCL prompt, enter the following command to start the OpenVMS Integrity servers Boot Manager utility:

```
$$$ @SYS$MANAGER:BOOT_OPTIONS
```

When the utility is launched, the main menu is displayed. To add your FC system disk as a boot option, enter 1 at the prompt, as in the following example:

```
OpenVMS I64 Boot Manager Boot Options List Management Utility

(1) ADD an entry to the Boot Options list
(2) DISPLAY the Boot Options list
(3) REMOVE an entry from the Boot Options list
(4) MOVE the position of an entry in the Boot Options list
(5) VALIDATE boot options and fix them as necessary
(6) Modify Boot Options TIMEOUT setting

(B) Set to operate on the Boot Device Options list
(D) Set to operate on the Dump Device Options list
(G) Set to operate on the Debug Device Options list

(E) EXIT from the Boot Manager utility

You can also enter Ctrl-Y at any time to abort this utility

Enter your choice: 1
```

NOTE: While using this utility, you can change a response made to an earlier prompt by entering the caret (^) character as many times as needed. To end and return to the DCL prompt, press Ctrl/Y.

The utility prompts you for the device name. Enter the FC system disk device you are using for this installation. In the following example, the device is a multipath FC device named $1$DGA1:. Using a multipath device ensures that the system can still boot if one path fails.

```
Enter the device name (enter "?" for a list of devices): $1$DGA1:
```

The utility prompts you for the position you want your entry to take in the EFI boot option list. Enter 1 to enable automatic reboot, as in the following example:

```
Enter the desired position number (1,2,3,,,) of the entry.
To display the Boot Options list, enter "?" and press Return.
Position [1]: 1
```

The utility prompts you for OpenVMS boot flags. By default, no flags are set. Enter the OpenVMS flags (for example, 0,1), or accept the default (NONE) to set no flags, as in the following example:

```
Enter the value for VMS_FLAGS in the form n,n.
VMS_FLAGS [NONE]:
```

The utility prompts you for a description to include with your boot option entry. By default, the device name is used as the description. You can enter more descriptive information. In the following example, the default is taken:

```
Enter a short description (do not include quotation marks).
Description ["$1$DGA1"]:

efi$bcfg: $1$dga1 (Boot0001) Option successfully added
efi$bcfg: $1$dga1 (Boot0002) Option successfully added
efi$bcfg: $1$dga1 (Boot0003) Option successfully added
efi$bcfg: $1$dga1 (Boot0004) Option successfully added
```

This example display shows that four different FC boot paths were configured for your FC system disk.
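If you save the console log of a Boot Manager session, you can confirm that one option was created per FC path by counting the efi$bcfg confirmation lines. The following Python sketch assumes the log text has been captured into a string. The function added_boot_entries is a hypothetical name and is not part of OpenVMS. The pattern relies only on the message format shown above and on the EFI convention that boot variables are named Boot followed by four hexadecimal digits.

```python
import re

# Matches lines of the form:
#   efi$bcfg: $1$dga1 (Boot0001) Option successfully added
ADDED = re.compile(
    r"efi\$bcfg: (?P<device>\S+) \((?P<boot_var>Boot[0-9A-Fa-f]{4})\) Option successfully added"
)


def added_boot_entries(output: str) -> list[tuple[str, str]]:
    """Return (device, Boot####) pairs for every option reported as added."""
    return [(m["device"], m["boot_var"]) for m in ADDED.finditer(output)]


transcript = """\
efi$bcfg: $1$dga1 (Boot0001) Option successfully added
efi$bcfg: $1$dga1 (Boot0002) Option successfully added
efi$bcfg: $1$dga1 (Boot0003) Option successfully added
efi$bcfg: $1$dga1 (Boot0004) Option successfully added
"""

entries = added_boot_entries(transcript)
print(len(entries), "boot options added:", [boot for _, boot in entries])
# 4 boot options added: ['Boot0001', 'Boot0002', 'Boot0003', 'Boot0004']
```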

After you successfully add your boot option, exit the utility by entering E at the prompt.

```
Enter your choice: E
```

Log out from the DCL level and shut down the Integrity server system.

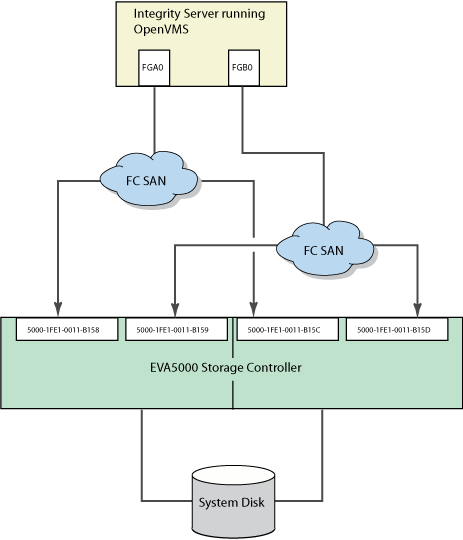

When EFI next displays the boot option list at your console, it should look similar to the following (assuming you accepted the default description when prompted). In this example, the device is $1$DGA1 for two dual-ported EVA5000 storage arrays (the four separate boot paths are identified in the display). Figure 1 illustrates the host FC ports (FGA0 and FGB0) on the Integrity server and the corresponding FC SAN/EVA5000 storage controller configuration.

```
Please select a boot option

    $1$dga1 FGA0.5000-1FE1-0011-B15C
    $1$dga1 FGA0.5000-1FE1-0011-B158
    $1$dga1 FGB0.5000-1FE1-0011-B15D
    $1$dga1 FGB0.5000-1FE1-0011-B159
    EFI Shell [Built-in]
```

The text to the right of $1$dga1 identifies the boot path from the host adapter to the storage controller, where:

FGA0 and FGB0 are the FC ports (also known as host adapters).

Each 5000-1FE1-0011-B15n number (where n is C, 8, D, or 9, respectively) is the factory-assigned 64-bit worldwide identifier (WWID) of the FC storage port, otherwise known as the FC port name.
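Because each label has a fixed structure, you can split it into device, host port, and storage port WWID. The following Python sketch (parse_boot_option is a hypothetical helper, not an OpenVMS or EFI tool) converts the dash-separated port name into a 64-bit integer and groups the four sample paths by host adapter. The grouping shows that each host port reaches two storage ports.

```python
from collections import defaultdict


def parse_boot_option(label: str) -> tuple[str, str, int]:
    """Split '$1$dga1 FGA0.5000-1FE1-0011-B15C' into (device, host_port, wwid)."""
    device, path = label.split()
    host_port, port_name = path.split(".", 1)
    hex_digits = port_name.replace("-", "")
    if len(hex_digits) != 16:  # 16 hex digits = 64 bits
        raise ValueError(f"expected a 64-bit port name, got {port_name!r}")
    return device, host_port, int(hex_digits, 16)


# Labels copied from the sample EFI boot menu above.
options = [
    "$1$dga1 FGA0.5000-1FE1-0011-B15C",
    "$1$dga1 FGA0.5000-1FE1-0011-B158",
    "$1$dga1 FGB0.5000-1FE1-0011-B15D",
    "$1$dga1 FGB0.5000-1FE1-0011-B159",
]

paths_by_port = defaultdict(list)
for label in options:
    _device, host_port, wwid = parse_boot_option(label)
    paths_by_port[host_port].append(f"{wwid:016X}")

for host_port, wwids in sorted(paths_by_port.items()):
    print(host_port, "->", ", ".join(wwids))
# FGA0 -> 50001FE10011B15C, 50001FE10011B158
# FGB0 -> 50001FE10011B15D, 50001FE10011B159
```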

If the boot option list becomes confusing, boot the OpenVMS Integrity servers OE DVD and use the OpenVMS Integrity servers Boot Manager utility to remove the current boot options (option 3) and then add your boot options again.

For more information about this utility, see the HP OpenVMS System Manager's Manual, Volume 1: Essentials.

Boot the FC system disk by selecting the appropriate boot option from the EFI Boot Manager menu and pressing Enter. If your FC boot path is the first option in the menu, it might boot automatically after the countdown timer expires.

If you have booted the OpenVMS Integrity servers OE DVD and installed the operating system onto an FC (SAN) disk and configured the system to operate in an OpenVMS Cluster environment, you can configure additional Integrity server systems to boot into the OpenVMS Cluster by following these steps:

Run the cluster configuration utility on the initial cluster system to add the new node to the cluster. On an OpenVMS Alpha system, run the utility by entering the following command:

```
$ @SYS$MANAGER:CLUSTER_CONFIG_LAN
```

On an OpenVMS Integrity servers system, run the utility by entering the following command:

```
$ @SYS$MANAGER:CLUSTER_CONFIG_LAN
```

Boot the HP OpenVMS Integrity servers OE DVD on the target node (the new node).

Select option 8 from the operating system menu to access OpenVMS DCL.

Start the OpenVMS Integrity servers Boot Manager utility by entering the following command at the DCL prompt:

```
$$$ @SYS$MANAGER:BOOT_OPTIONS
```

NOTE: The OpenVMS Integrity servers Boot Manager utility requires that the shared FC disk be mounted. If the shared FC disk is not mounted clusterwide, the utility tries to mount the disk with the /NOWRITE qualifier. If the shared FC disk is already mounted clusterwide, user intervention is required.

Use the utility to add a new entry for the shared cluster system disk, following the Boot Manager steps described earlier in this section.